
Kubernetes on Digital Ocean: learnings from using kops

Having used the Digital Ocean managed Kubernetes service for a while now, I decided to explore a home-made solution to see whether I could gain more control over cluster management and administration, and also cut some costs from the cloud bill. When it comes to installing your own Kubernetes cluster, the most widely adopted and best supported solutions seem to be kops and kubespray. kops has direct support for DO, whereas kubespray would involve more work: setting up the cloud controller manager plus the other components required to support DO, such as the CSI plugin. kops therefore seemed like a better fit. While gathering initial information, I also discovered that kops has a quite active community on the Kubernetes Slack, which was encouraging. The only thing bothering me was that kops DO support is still in beta, so bugs should be expected (yes, I did encounter some annoying ones), but as they say, if you never try you will never know.

Initial scouting

Looking at the requirements for installing Kubernetes with kops, things seem dead simple. The only things that need a bit of preparation are the DNS domain and the DO Spaces bucket (the equivalent of AWS S3), which kops requires to store the cluster state. DO Spaces is pay as you go, but unlike AWS it has a 5$ monthly base fee on top of the storage consumption. Also, DO Spaces buckets do not support versioning, which is quite important in our case, since we want to keep track of the changes made to the cluster state. I was wondering whether I could use an AWS S3 bucket as the state store even though the cluster would be installed in DO. The answer is yes.
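For reference, a versioned S3 bucket for the state store could be prepared roughly as follows. This is a minimal sketch, assuming the AWS CLI is installed and configured; the bucket name and region are the placeholders used later in this post, and the `DRY_RUN` switch and `run` helper are illustrative, not part of any tool.

```shell
#!/bin/sh
# Sketch: create a versioned S3 bucket to use as the kops state store.
# Assumptions: the AWS CLI is installed and configured; names mirror this post.
BUCKET="${BUCKET:-kops-bucket-state-store}"
REGION="${REGION:-eu-central-1}"
DRY_RUN="${DRY_RUN:-1}"   # hypothetical switch: 1 prints the commands, 0 runs them

# Print the command in dry-run mode, execute it otherwise.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

create_state_store() {
  # Outside us-east-1 the region must also be passed as a location constraint.
  run aws s3api create-bucket --bucket "$BUCKET" --region "$REGION" \
    --create-bucket-configuration "LocationConstraint=$REGION"
  # Versioning keeps previous revisions of the cluster state files.
  run aws s3api put-bucket-versioning --bucket "$BUCKET" \
    --versioning-configuration Status=Enabled
}

create_state_store
```

With the default `DRY_RUN=1` the script only prints the two commands, so they can be reviewed before running them for real with `DRY_RUN=0`.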

Cool hack: using AWS S3 instead of DO spaces (Optional)

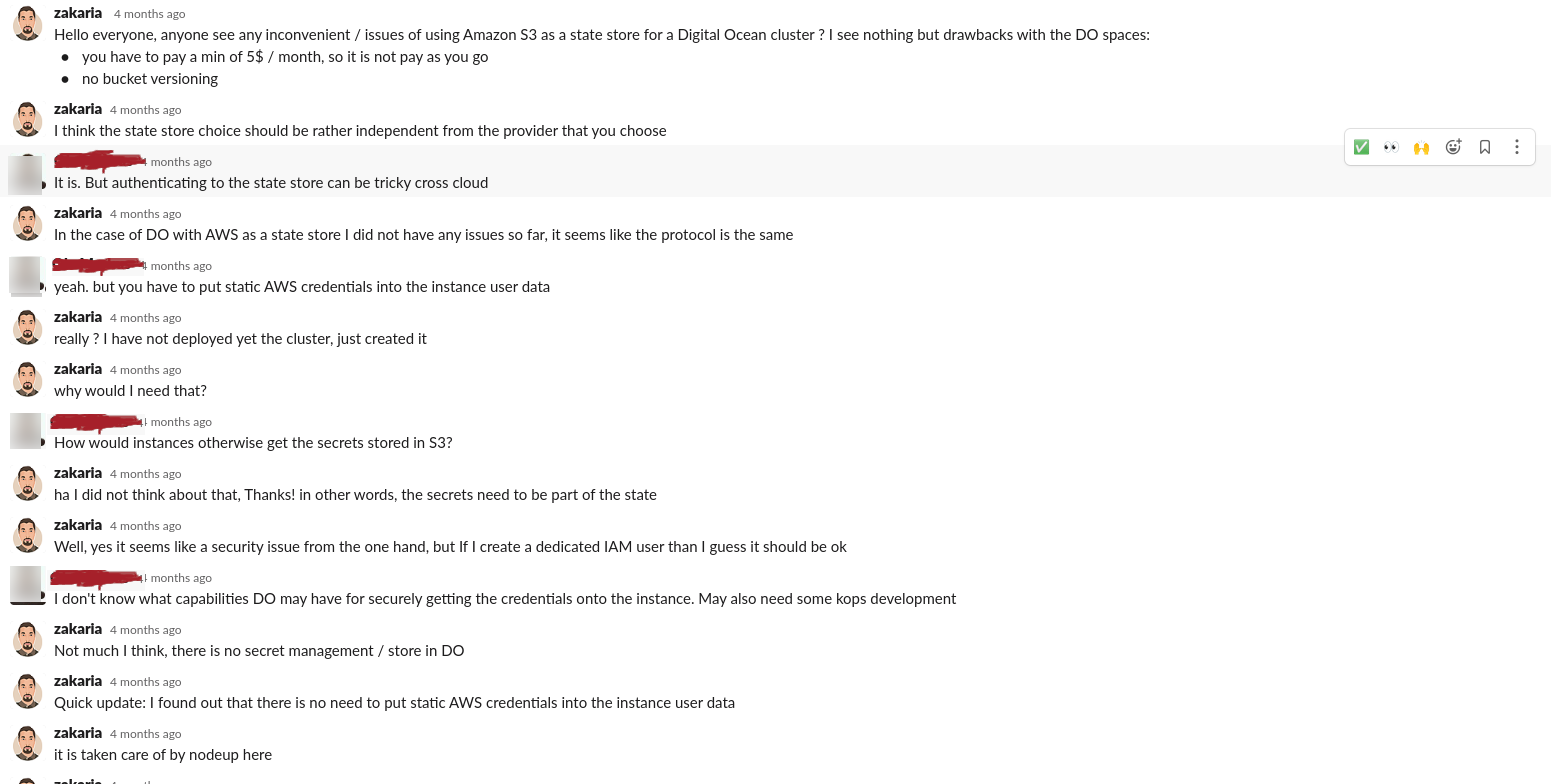

I was unwilling to use Digital Ocean Spaces even though my cluster was going to be installed there, so I asked on the kops Slack channel.

After some back and forth with the kops team, I found out that kops uses the same client to talk to both AWS S3 and DO Spaces endpoints because they speak a similar protocol (for more details: https://github.com/kubernetes/kops/blob/master/util/pkg/vfs/context.go#L319, https://github.com/kubernetes/kops/blob/master/util/pkg/vfs/context.go#L301). Simply pointing the relevant environment variables at my S3 bucket was therefore enough to make kops use S3 as the state store. Setting S3_ENDPOINT is important because it tells kops that we are using an S3-compatible state store, as you can see here:

https://github.com/kubernetes/kops/blob/master/nodeup/pkg/bootstrap/install.go#L111

So my config looked something like this:

KOPS_STATE_STORE=s3://kops-bucket-state-store

DIGITALOCEAN_ACCESS_TOKEN=token

S3_ENDPOINT=s3.eu-central-1.amazonaws.com

S3_REGION=eu-central-1

S3_ACCESS_KEY_ID=the_access_key

S3_SECRET_ACCESS_KEY=the_secret_access_key
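Since kops reads these settings from the environment, they need to be exported in the shell that runs it. The sketch below fails early when one of them is missing; `check_kops_env` is a hypothetical helper written for this post, and the values are the same placeholders as above.

```shell
#!/bin/sh
# Sketch: fail early if a variable needed for this setup is missing.
# The variable names are the ones listed above; check_kops_env is a hypothetical helper.
check_kops_env() {
  missing=""
  for name in KOPS_STATE_STORE DIGITALOCEAN_ACCESS_TOKEN S3_ENDPOINT \
              S3_REGION S3_ACCESS_KEY_ID S3_SECRET_ACCESS_KEY; do
    # Indirect lookup in POSIX sh: read the value of the variable called $name.
    eval "value=\${$name:-}"
    [ -n "$value" ] || missing="$missing $name"
  done
  if [ -n "$missing" ]; then
    echo "missing:$missing"
    return 1
  fi
  echo "ok"
}

# Placeholder values, as in the post; export them so that kops can see them.
export KOPS_STATE_STORE="s3://kops-bucket-state-store"
export S3_ENDPOINT="s3.eu-central-1.amazonaws.com"
export S3_REGION="eu-central-1"

# Reports the variables that still have to be exported (token and S3 keys here).
check_kops_env || true
```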

Cool hack 2: using my existing AWS Route53 domain (Optional)

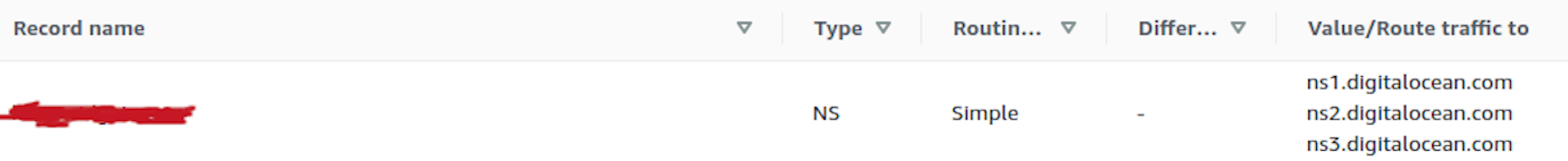

Another important setting that needs to be configured before spinning up the cluster is the domain name. The domain gives the cluster its identity and is used to talk to the API server with tools such as kubectl. Since I already have many domains in AWS, I wanted to see whether I could use one of them. Unlike AWS and GCP, the DO DNS service is not a registrar, so you need to have a domain already registered, and then have it managed by DO by adding some DNS records. I checked the docs, but AWS was not among the long list of providers documented (maybe because DO folks hate AWS); however, the procedure seems to be similar for all of them. I created a hosted zone in AWS for a subdomain of one of my existing domains, let’s say k8.mydomain.com, and replaced the values of its NS record with the DO name server entries.
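Before creating the cluster, it can help to confirm that the subdomain really answers with the Digital Ocean name servers. The following is a minimal sketch, assuming `dig` is installed and that the zone is meant to be delegated to ns1, ns2 and ns3.digitalocean.com; `CHECK_ZONE` and the helper functions are hypothetical names used only for this example.

```shell
#!/bin/sh
# Sketch: verify that the subdomain is delegated to the DigitalOcean name servers.
# Assumptions: dig is installed; the zone should point at the three DO name servers.
EXPECTED_NS="ns1.digitalocean.com
ns2.digitalocean.com
ns3.digitalocean.com"

# Normalise a list of name servers: lower-case, no trailing dot, sorted.
normalise_ns() {
  tr 'A-Z' 'a-z' | sed 's/\.$//' | sort
}

# Read an NS answer on stdin and compare it with the expected DO name servers.
delegated_to_do() {
  [ "$(normalise_ns)" = "$EXPECTED_NS" ]
}

# Live lookup, only performed when CHECK_ZONE is set (hypothetical switch),
# e.g. CHECK_ZONE=k8.mydomain.com sh check-ns.sh
if [ -n "${CHECK_ZONE:-}" ]; then
  if dig +short NS "$CHECK_ZONE" | delegated_to_do; then
    echo "delegated to DO"
  else
    echo "not delegated yet"
  fi
fi
```

DNS changes can take a while to propagate, so a negative result right after editing the NS record is not necessarily an error.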

Let’s get this cluster up:

After getting all the cluster requirements prepared, it’s time to get it up and running. The cluster can be created with one command:

kops create cluster --cloud=digitalocean --name=mycluster.k8.mydomain.com --networking=flannel --master-count=3 --api-loadbalancer-type=public --node-size=s-2vcpu-4gb --image=debian-11-x64 --node-count=2 --zones=fra1 --ssh-public-key=~/.ssh/kops.pub

As you can see, kops provides several options for customizing the number of master and worker nodes as well as the Droplet type. To run in HA mode, kops requires the number of master nodes to be odd, which means that the minimum number of master nodes for an HA control plane is 3. Another important parameter is --networking, which specifies the CNI plugin for the cluster. kops supports many out of the box, such as weave, flannel, cilium and more. In my case, I chose flannel because it’s one of the most mature and widely adopted. There are many criteria to consider when choosing a CNI for a K8 cluster, but let’s stay focused on cluster creation for now.
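The odd-number rule comes from the way etcd, which runs on the master nodes, reaches agreement: a majority of members must be available. The sketch below only illustrates that arithmetic (quorum = floor(n/2) + 1); the function names are made up for this example.

```shell
#!/bin/sh
# Sketch: etcd quorum arithmetic behind the "odd number of masters" rule.
# quorum = floor(n/2) + 1; tolerated failures = n - quorum.
quorum() {
  echo $(( $1 / 2 + 1 ))
}

tolerated_failures() {
  echo $(( $1 - ($1 / 2 + 1) ))
}

for n in 1 2 3 4 5; do
  echo "masters=$n quorum=$(quorum "$n") tolerated_failures=$(tolerated_failures "$n")"
done
```

Going from 3 to 4 masters raises the quorum without tolerating any additional failure, which is why an even member count buys nothing.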

A successful run will produce output similar to the following:

Will create resources:

Droplet/master-fra1-1.masters.mycluster.k8.mydomain-com

Region fra1

Size s-2vcpu-4gb

Image debian-11-x64

SSHKey 62:53:c1:58:45:ba:e8:02:76:d7:b6:23:eb:02:2f:8f

Tags [KubernetesCluster:mycluster-k8-mydomain-com, k8s-index:etcd-1, KubernetesCluster-Master:mycluster-k8-mydomain-com, kops-instancegroup:master-fra1-1]

Count 1

Droplet/master-fra1-2.masters.mycluster.k8.mydomain-com

Region fra1

Size s-2vcpu-4gb

Image debian-11-x64

SSHKey 62:53:c1:58:45:ba:e8:02:76:d7:b6:23:eb:02:2f:8f

Tags [KubernetesCluster:mycluster-k8-mydomain-com, k8s-index:etcd-2, KubernetesCluster-Master:mycluster-k8-mydomain-com, kops-instancegroup:master-fra1-2]

Count 1

Droplet/master-fra1-3.masters.mycluster.k8.mydomain-com

Region fra1

Size s-2vcpu-4gb

Image debian-11-x64

SSHKey 62:53:c1:58:45:ba:e8:02:76:d7:b6:23:eb:02:2f:8f

Tags [KubernetesCluster:mycluster-k8-mydomain-com, k8s-index:etcd-3, KubernetesCluster-Master:mycluster-k8-mydomain-com, kops-instancegroup:master-fra1-3]

Count 1

Droplet/nodes-fra1.mycluster.k8.mydomain-com

Region fra1

Size s-2vcpu-4gb

Image debian-11-x64

SSHKey 62:53:c1:58:45:ba:e8:02:76:d7:b6:23:eb:02:2f:8f

Tags [KubernetesCluster:mycluster-k8-mydomain-com, kops-instancegroup:nodes-fra1]

Count 2

Keypair/apiserver-aggregator-ca

Subject cn=apiserver-aggregator-ca

Issuer

Type ca

LegacyFormat false

Keypair/etcd-clients-ca

Subject cn=etcd-clients-ca

Issuer

Type ca

LegacyFormat false

Keypair/etcd-manager-ca-events

Subject cn=etcd-manager-ca-events

Issuer

Type ca

LegacyFormat false

Keypair/etcd-manager-ca-main

Subject cn=etcd-manager-ca-main

Issuer

Type ca

LegacyFormat false

Keypair/etcd-peers-ca-events

Subject cn=etcd-peers-ca-events

Issuer

Type ca

LegacyFormat false

Keypair/etcd-peers-ca-main

Subject cn=etcd-peers-ca-main

Issuer

Type ca

LegacyFormat false

Keypair/kube-proxy

Signer name:kubernetes-ca id:cn=kubernetes-ca

Subject cn=system:kube-proxy

Issuer cn=kubernetes-ca

Type client

LegacyFormat false

Keypair/kubelet

Signer name:kubernetes-ca id:cn=kubernetes-ca

Subject o=system:nodes,cn=kubelet

Issuer cn=kubernetes-ca

Type client

LegacyFormat false

Keypair/kubernetes-ca

Subject cn=kubernetes-ca

Issuer

Type ca

LegacyFormat false

Keypair/service-account

Subject cn=service-account

Issuer

Type ca

LegacyFormat false

LoadBalancer/api-mycluster-k8-mydomain-com

Region fra1

DropletTag KubernetesCluster-Master:mycluster-k8-mydomain-com

ForAPIServer false

ManagedFile/cluster-completed.spec

Base s3://kops-bucket-state-store/mycluster.k8.mydomain-com

Location cluster-completed.spec

ManagedFile/etcd-cluster-spec-events

Base s3://kops-bucket-state-store/mycluster.k8.mydomain-com/backups/etcd/events

Location /control/etcd-cluster-spec

ManagedFile/etcd-cluster-spec-main

Base s3://kops-bucket-state-store/mycluster.k8.mydomain-com/backups/etcd/main

Location /control/etcd-cluster-spec

ManagedFile/mycluster.k8.mydomain-com-addons-bootstrap

Location addons/bootstrap-channel.yaml

ManagedFile/mycluster.k8.mydomain-com-addons-core.addons.k8s.io

Location addons/core.addons.k8s.io/v1.4.0.yaml

ManagedFile/mycluster.k8.mydomain-com-addons-coredns.addons.k8s.io-k8s-1.12

Location addons/coredns.addons.k8s.io/k8s-1.12.yaml

ManagedFile/mycluster.k8.mydomain-com-addons-digitalocean-cloud-controller.addons.k8s.io-k8s-1.8

Location addons/digitalocean-cloud-controller.addons.k8s.io/k8s-1.8.yaml

ManagedFile/mycluster.k8.mydomain-com-addons-dns-controller.addons.k8s.io-k8s-1.12

Location addons/dns-controller.addons.k8s.io/k8s-1.12.yaml

ManagedFile/mycluster.k8.mydomain-com-addons-kops-controller.addons.k8s.io-k8s-1.16

Location addons/kops-controller.addons.k8s.io/k8s-1.16.yaml

ManagedFile/mycluster.k8.mydomain-com-addons-kubelet-api.rbac.addons.k8s.io-k8s-1.9

Location addons/kubelet-api.rbac.addons.k8s.io/k8s-1.9.yaml

ManagedFile/mycluster.k8.mydomain-com-addons-limit-range.addons.k8s.io

Location addons/limit-range.addons.k8s.io/v1.5.0.yaml

ManagedFile/mycluster.k8.mydomain-com-addons-networking.flannel-k8s-1.12

Location addons/networking.flannel/k8s-1.12.yaml

ManagedFile/mycluster.k8.mydomain-com-addons-rbac.addons.k8s.io-k8s-1.8

Location addons/rbac.addons.k8s.io/k8s-1.8.yaml

ManagedFile/kops-version.txt

Base s3://kops-bucket-state-store/mycluster.k8.mydomain-com

Location kops-version.txt

ManagedFile/manifests-etcdmanager-events

Location manifests/etcd/events.yaml

ManagedFile/manifests-etcdmanager-main

Location manifests/etcd/main.yaml

ManagedFile/manifests-static-kube-apiserver-healthcheck

Location manifests/static/kube-apiserver-healthcheck.yaml

ManagedFile/nodeupconfig-master-fra1-1

Location igconfig/master/master-fra1-1/nodeupconfig.yaml

ManagedFile/nodeupconfig-master-fra1-2

Location igconfig/master/master-fra1-2/nodeupconfig.yaml

ManagedFile/nodeupconfig-master-fra1-3

Location igconfig/master/master-fra1-3/nodeupconfig.yaml

ManagedFile/nodeupconfig-nodes-fra1

Location igconfig/node/nodes-fra1/nodeupconfig.yaml

Secret/admin

Secret/kube

Secret/kube-proxy

Secret/kubelet

Secret/system:controller_manager

Secret/system:dns

Secret/system:logging

Secret/system:monitoring

Secret/system:scheduler

Volume/kops-etcd-1-etcd-events-mycluster-k8-mydomain-com

SizeGB 20

Region fra1

Tags {etcdCluster-events: etcd-1, k8s-index: etcd-1, KubernetesCluster: mycluster-k8-mydomain-com}

Volume/kops-etcd-1-etcd-main-mycluster-k8-mydomain-com

SizeGB 20

Region fra1

Tags {etcdCluster-main: etcd-1, k8s-index: etcd-1, KubernetesCluster: mycluster-k8-mydomain-com}

Volume/kops-etcd-2-etcd-events-mycluster-k8-mydomain-com

SizeGB 20

Region fra1

Tags {etcdCluster-events: etcd-2, k8s-index: etcd-2, KubernetesCluster: mycluster-k8-mydomain-com}

Volume/kops-etcd-2-etcd-main-mycluster-k8-mydomain-com

SizeGB 20

Region fra1

Tags {k8s-index: etcd-2, KubernetesCluster: mycluster-k8-mydomain-com, etcdCluster-main: etcd-2}

Volume/kops-etcd-3-etcd-events-mycluster-k8-mydomain-com

SizeGB 20

Region fra1

Tags {KubernetesCluster: mycluster-k8-mydomain-com, etcdCluster-events: etcd-3, k8s-index: etcd-3}

Volume/kops-etcd-3-etcd-main-mycluster-k8-mydomain-com

SizeGB 20

Region fra1

Tags {etcdCluster-main: etcd-3, k8s-index: etcd-3, KubernetesCluster: mycluster-k8-mydomain-com}

Must specify --yes to apply changes

Cluster configuration has been created.

The output lists all the resources that kops will create, and the cluster state is saved in the bucket. At this point, our cluster has not been created yet, so we need to run the update command to reconcile the actual cluster state with the desired state stored in the bucket:

kops update cluster --name mycluster.k8.mydomain.com --yes --admin

After a successful execution you should see something similar:

I0805 19:21:18.864775 420833 update_cluster.go:326] Exporting kubeconfig for cluster

kOps has set your kubectl context to mycluster.k8.mydomain.com

Cluster is starting. It should be ready in a few minutes.
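Rather than polling by hand, kops can wait for the cluster to become healthy. This is a minimal sketch, assuming kops is installed and the state store variables are exported; the 10 minute timeout is an arbitrary choice, and `DRY_RUN` and `run` are illustrative helpers.

```shell
#!/bin/sh
# Sketch: wait for the new cluster to become healthy after `kops update cluster --yes`.
# Assumptions: kops is installed and KOPS_STATE_STORE is exported.
CLUSTER_NAME="${CLUSTER_NAME:-mycluster.k8.mydomain.com}"
DRY_RUN="${DRY_RUN:-1}"   # hypothetical switch: 1 prints the command, 0 runs it

run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

wait_for_cluster() {
  # --wait keeps re-validating until the cluster is ready or the timeout expires.
  run kops validate cluster --name "$CLUSTER_NAME" --wait 10m
}

wait_for_cluster
```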

Oops, DNS controller is crashing:

Once the cluster was created, I noticed something weird: the API server was unreachable through kubectl. Normally, after a kops installation, the kube context is configured and communication with the API server should be possible. I checked the DNS entries and noticed right away that there were no DNS records for the public API server load balancer. As we saw before, kops creates a load balancer whose DNS name follows the pattern api.{cluster_domain}. However, I did not find any DNS entry for api.mycluster.k8.mydomain.com, which seemed like a very early misfortune. My first idea was to manually create an entry pointing to the load balancer IP address, so that I could reach the cluster with kubectl and figure out what was going on. Looking at the pods in the kube-system namespace, I saw this:

dns-controller-7dbdbc467f-zg2x7 0/1 CrashLoopBackOff 10 (20s ago) 28m

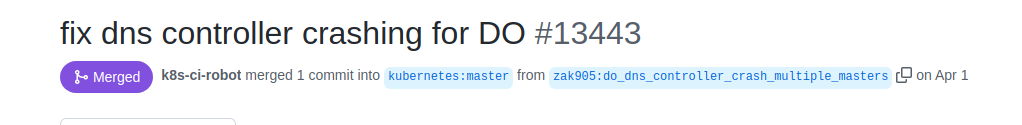

The DNS controller, a kops component responsible for creating the DNS records for both the internal and public API server when the cluster is created, and for updating them when something changes, was crashing. I checked the dns-controller logs and discussed the issue with the kops dev team, and we came to the conclusion that this was a bug that needed to be fixed. After digging into the kops source code, I found the pain point and submitted a fix, which was accepted by the kops team. For details, you can check out the issue description here: https://github.com/kubernetes/kops/issues/13441 and the PR with the fix: https://github.com/kubernetes/kops/pull/13443

Luckily now, if you are using versions > 1.23.0 (which is recommended), you will not encounter this issue. No need to thank me, you are welcome.
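For anyone hitting a similar symptom, the crashing pod can be spotted and inspected along these lines. This is a sketch, assuming kubectl already points at the cluster; `crashing_pods` and the `LIVE` switch are hypothetical names for this example.

```shell
#!/bin/sh
# Sketch: spot crash-looping pods in kube-system and pull the dns-controller logs.
# Assumptions: kubectl points at the cluster; LIVE is a hypothetical switch.

# Read `kubectl get pods` output on stdin and print the names of crash-looping pods.
crashing_pods() {
  awk '$3 == "CrashLoopBackOff" { print $1 }'
}

if [ "${LIVE:-0}" = "1" ]; then
  kubectl -n kube-system get pods --no-headers | crashing_pods
  # The logs usually show why the controller keeps restarting.
  kubectl -n kube-system logs deployment/dns-controller --tail=50
fi
```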

Wait a sec, there is no CSI plugin installed:

Another annoying thing I noticed when I started deploying workloads was that no CSI plugin was installed for Digital Ocean, so whenever a volume had to be created, things got stuck. It does not take much to install the necessary components to get volumes working, but it would have been nice to have the plugin already installed. I discussed the matter with the kops team and we agreed that this is indeed something reasonable to have installed and managed by kops. I opened an issue in the kops project, which was resolved quickly by the kops maintainers. More details here: https://github.com/kubernetes/kops/issues/13480

Luckily any new kops installations with versions > 1.23.0 will have the CSI plugin installed and managed by kops.
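A quick way to tell whether a cluster has the plugin is to look at the registered CSI drivers. The sketch below assumes the Digital Ocean driver registers under the name `dobs.csi.digitalocean.com`; `has_do_csi` and the `LIVE` switch are hypothetical names for this example.

```shell
#!/bin/sh
# Sketch: check whether the DigitalOcean CSI driver is registered in the cluster.
# Assumption: the driver registers under the name dobs.csi.digitalocean.com.
DO_CSI_DRIVER="dobs.csi.digitalocean.com"

# Read `kubectl get csidrivers` output on stdin; succeed if the DO driver is listed.
has_do_csi() {
  grep -qF "$DO_CSI_DRIVER"
}

if [ "${LIVE:-0}" = "1" ]; then
  if kubectl get csidrivers --no-headers | has_do_csi; then
    echo "DO CSI driver is installed"
  else
    echo "DO CSI driver is missing: volume creation will hang"
  fi
fi
```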

Comparing with Digital Ocean managed Kubernetes:

So now that we have our cluster up and running and all the issues resolved, it’s worth doing a comparison between the DO managed solution and kops in terms of pricing.

DO managed K8 provides a free control plane (without HA), so only the cost of the worker nodes is taken into account. If an HA control plane is needed, an additional 40$ per month has to be paid.

In order to create a similar cluster to the one above we need:

- 5 worker nodes (2 vCPUs, 4GB RAM): 24$ x 5 = 120$

- HA control plane: 40$

- Total: 160$/month

(prices are as of the date this post is published)

Let’s compare with the resources created by kops:

- 5 nodes (3 master and 2 worker nodes): 24$ x 5 = 120$

- 6 x 20 GB volumes for etcd (main and events): 6 x 2$ = 12$

- load balancer for the API server: 12$

- if you are not using S3 (as I mentioned before), you need to use DO Spaces, which costs 5$

- Additionally, you need to have a domain. The cheapest ones can be bought for around 11$/year. If you have an existing one, you can of course use a subdomain.

- Total: between 144$/month and 150$/month

Which means that with kops you could save roughly 10$ to 16$ per month on each cluster.
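The comparison can be recomputed from the unit prices listed above; six 20 GB volumes at 2$ each come to 12$. This is only a sketch of the arithmetic, with the 11$/year domain rounded up to 1$ per month; the variable names are made up for this example.

```shell
#!/bin/sh
# Sketch: recompute the monthly totals (in $) from the unit prices quoted in this post.
NODE=24               # one s-2vcpu-4gb Droplet
VOLUME=2              # one 20 GB volume
LB=12                 # API server load balancer
SPACES=5              # DO Spaces base fee, only if S3 is not used
HA_CONTROL_PLANE=40   # managed HA control plane

managed=$(( 5 * NODE + HA_CONTROL_PLANE ))
# Cheapest kops setup: S3 state store and an existing domain.
kops_min=$(( 5 * NODE + 6 * VOLUME + LB ))
# Most expensive kops setup: DO Spaces plus about 1$/month for an 11$/year domain.
kops_max=$(( kops_min + SPACES + 1 ))

echo "managed=$managed kops_min=$kops_min kops_max=$kops_max"
echo "savings between $(( managed - kops_max )) and $(( managed - kops_min ))"
```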

From a resources perspective, each worker node of the DO managed solution has roughly 2.5 GB of its 4 GB of RAM available for workloads, which makes about 12.5 GB of usable RAM in total. With kops, on the other hand, about 45% of the overall RAM was already used by the different kube-system components, so of the 20 GB in total only ~11 GB was usable for deploying workloads.

Besides the cost, there are also some notable differences concerning the level of control. Here are some notes I gathered:

| | Kops | DO Managed K8 |
|---|---|---|
| Upgrades | You have full control over the version | Defined by the DO release cycle, so you may have to wait to get the latest version. For example, at the time of writing the latest Kubernetes version is 1.24.3 while DO's latest supported version is 1.23.9 |
| Access to nodes | You can ssh to the nodes and apply any change you want (e.g. updating packages, configuring log rotation, etc.) | Technically you can, but you should not: the Digital Ocean agent will revert any change you make to the nodes |
| Control over Kubernetes components (kube-apiserver, kube-proxy, kubelet...) | You have control over all the components, and you can also add or change arguments for each component through the kops specs | Besides kube-proxy, you have no access to the other Kubernetes components. In fact, they are not visible at all |
| CNI plugin choice | You can choose between different CNI plugins like weave, flannel, cilium and more | Uses cilium, and there is no way to switch to something else |
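To make the kops column more concrete, changing component arguments or upgrading Kubernetes typically follows an edit, update, rolling-update cycle. This is a sketch of that workflow, assuming kops is installed and the state store is configured; `DRY_RUN`, `run` and the two function names are illustrative.

```shell
#!/bin/sh
# Sketch: typical kops workflow for changing component settings or upgrading Kubernetes.
# Assumptions: kops is installed and KOPS_STATE_STORE is exported.
CLUSTER_NAME="${CLUSTER_NAME:-mycluster.k8.mydomain.com}"
DRY_RUN="${DRY_RUN:-1}"   # hypothetical switch: 1 prints the commands, 0 runs them

run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Push the desired state to the cloud, then replace nodes so they pick it up.
apply_and_roll() {
  run kops update cluster --name "$CLUSTER_NAME" --yes
  run kops rolling-update cluster --name "$CLUSTER_NAME" --yes
}

# Edit the cluster spec (e.g. component arguments), then apply it.
change_spec() {
  run kops edit cluster --name "$CLUSTER_NAME"
  apply_and_roll
}

# Move the cluster spec to the Kubernetes version recommended by kops, then apply it.
upgrade_kubernetes() {
  run kops upgrade cluster --name "$CLUSTER_NAME" --yes
  apply_and_roll
}

change_spec
```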

Wrap up:

kops is an interesting tool that is worth a shot. It was initially aimed at AWS but has since expanded to other cloud providers like DO. We have seen how easy it is to spin up a cluster. Because DO support is still in beta in kops, some bugs may occur when operating the cluster. This could be annoying in production, so for now I would recommend using it for non-critical clusters. kops also has a quite active and responsive team on Slack: I’d give kops 5 stars when it comes to getting support or having a fix merged. Finally, we have seen the trade-offs between the DO managed K8 service and kops. Using kops gives more control over the cluster and saves some bucks on the cloud bill, but you may need to spend more time and effort managing the cluster, whereas the managed DO offering relieves you of cluster management tasks at the price of a bit of additional cost and some loss of control. At the end of the day, time is money, so spending time on cluster management is not free.