
Why using system:masters in your Kubernetes kubeconfig is bad

I wanted to share some thoughts on why using a certificate with system:masters as its group (the Organization field, in certificate terms) in your Kubernetes kubeconfig is a bad idea, especially if you share it with other users. I recently started a DevOps position and was asked to do some account clean-up after a developer left. Everything went smoothly until I got to the Kubernetes access part, where I fell into a difficult security dilemma: manually rotating the Kubernetes CA certificates (which can be quite painful), or leaving the Kubernetes cluster vulnerable and trusting the developer to behave professionally.

What happened:

Under stressful conditions, and because there was no time to look into tools and ways to generate a tailored kubeconfig with limited access, the developer had been given (some time before I joined the company), without much thought about the future consequences, the master kubeconfig: the admin.conf file that is generated when the cluster is created (usually found under /etc/kubernetes/admin.conf on the control plane). This kubeconfig not only allows access to all cluster resources (which is bad for overall security), but also grants access that cannot be revoked and lasts until the cluster certificates expire. Let’s see why.

The naive approach:

I started the process of disabling access by first creating a new “admin” kubeconfig file:

- Generating a private key and a certificate signed with the Kubernetes CA certificate and CA key (they can be found under `/etc/kubernetes/ssl` on the control plane node), using openssl. I chose a brand new group name, `root`, and user name, `root`, for the certificate subject, using the `-subj` option: `-subj "/CN=root/O=root"`.
- Creating the necessary `ClusterRole` and `ClusterRoleBinding` for the user and group:

  ```yaml
  kind: ClusterRole
  apiVersion: rbac.authorization.k8s.io/v1
  metadata:
    name: root
  rules:
  # every verb on every resource in every API group
  - apiGroups: ["*"]
    resources: ["*"]
    verbs: ["*"]
  ---
  kind: ClusterRoleBinding
  apiVersion: rbac.authorization.k8s.io/v1
  metadata:
    name: root
  subjects:
  # matches the O=root field of the new certificate
  - kind: Group
    name: root
  roleRef:
    kind: ClusterRole
    name: root
    apiGroup: rbac.authorization.k8s.io
  ```

- Creating a kubeconfig file with the newly generated certificate and key. This can be done by replacing `client-certificate-data` and `client-key-data` with the base64-encoded content of the certificate and private key generated earlier. The user name needs to be updated as well. The `certificate-authority-data` should be left as-is.

Some of the steps are described in detail here: https://cloudhero.io/creating-users-for-your-kubernetes-cluster

I tried the new kubeconfig and it worked! Now it was time to delete the RBAC objects for the old kubeconfig, and everything should be fine. The next step was some reverse engineering to find out the user name and group associated with the old “admin” config. I grabbed the old file, base64-decoded the `client-certificate-data` value, and used openssl to print the certificate info. The whole process can be done with a single bash command: `echo "$CERT_DATA" | base64 -d | openssl x509 -text -noout`.

The certificate subject was `Subject: O = system:masters, CN = kubernetes-admin`. I went back to Kubernetes to delete any Role/ClusterRole and RoleBinding/ClusterRoleBinding associated with the system:masters group and the kubernetes-admin user. I found a ClusterRole named cluster-admin, which I deleted. In principle, all this mess should now have been history, and the old kubeconfig should have been denied access. I did one last access check with the old kubeconfig and, to my surprise, access was still granted. What was going on? I wondered whether I had missed a Role or ClusterRole, so I used a kubectl plugin named access-matrix to make sure I had not. I spent some time looking for an answer without success, and finally decided to ask on the Kubernetes Slack channel.

Why it does not work:

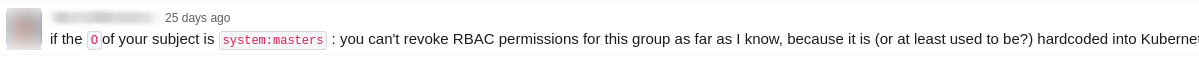

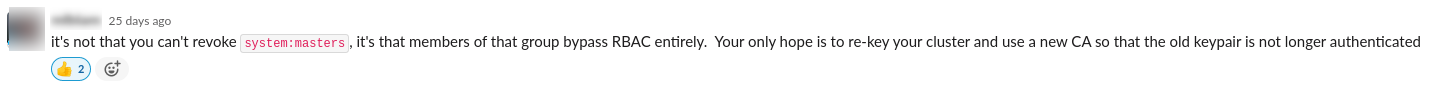

I got some helpful responses from the community (thanks again!), which led me to the conclusion that there was no point in trying harder.

According to the answers above, the system:masters group is not something that you can create or delete, and even if you try, it has literally no effect because it is hard-coded in Kubernetes. After digging a bit into the API server code, I found where it is defined:

https://github.com/kubernetes/apiserver/blob/master/pkg/authentication/user/user.go#L71

Another important point is that system:masters bypasses Kubernetes authorization checks, because it is treated as a kind of superuser group that can do anything. Here is the Kubernetes code responsible for that check:

https://github.com/kubernetes/kubernetes/blob/master/pkg/registry/rbac/escalation_check.go#L41
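Here is a deliberately simplified model of the behaviour, not the real kube-apiserver code. As far as I understand it, the API server approves requests from system:masters in a privileged-group check that runs before the configured authorizers such as RBAC. The sketch models only that ordering, with a toy map in place of real Roles and Bindings. The names `request`, `bindings` and `authorize` are hypothetical.

```go
package main

import "fmt"

// systemMasters has the same value as the user.SystemPrivilegedGroup constant
// in k8s.io/apiserver; it is not an RBAC object, so there is nothing to delete.
const systemMasters = "system:masters"

// request is a simplified view of an authorization request (toy model).
type request struct {
	User     string
	Groups   []string
	Verb     string
	Resource string
}

// bindings is a toy stand-in for RBAC: subject ("user:NAME" or "group:NAME")
// -> allowed "verb/resource" pairs, where "*/*" means everything.
type bindings map[string]map[string]bool

// allows reports whether any binding for the user or one of its groups matches.
func (b bindings) allows(r request) bool {
	subjects := []string{"user:" + r.User}
	for _, g := range r.Groups {
		subjects = append(subjects, "group:"+g)
	}
	for _, s := range subjects {
		rules := b[s] // a missing subject yields a nil map, which matches nothing
		if rules["*/*"] || rules[r.Verb+"/"+r.Resource] {
			return true
		}
	}
	return false
}

// authorize checks the privileged group first and only then consults RBAC,
// so deleting bindings never affects members of system:masters.
func authorize(r request, rbac bindings) bool {
	for _, g := range r.Groups {
		if g == systemMasters {
			return true // short-circuit: RBAC is never consulted
		}
	}
	return rbac.allows(r)
}

func main() {
	oldAdmin := request{User: "kubernetes-admin", Groups: []string{systemMasters}, Verb: "delete", Resource: "nodes"}
	newRoot := request{User: "root", Groups: []string{"root"}, Verb: "delete", Resource: "nodes"}

	rbac := bindings{"group:root": {"*/*": true}}
	fmt.Println(authorize(oldAdmin, rbac), authorize(newRoot, rbac)) // true true

	// Remove every binding, as in the clean-up attempt described above.
	rbac = bindings{}
	fmt.Println(authorize(oldAdmin, rbac), authorize(newRoot, rbac)) // true false
}
```

The second line of output is the whole story in miniature. Removing bindings revokes the `root` identity immediately, while the system:masters credential keeps working.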

I also found an interesting discussion in a GitHub issue in the kubeadm project repository: https://github.com/kubernetes/kubeadm/issues/2414.

Some revealing quotes from the discussion:

> the admin.conf is like a root password…it must not be shared.

> I’d agree that admin.conf should be a break-glass credential, but it may benefit cluster operators to provide a mechanism for that credential to be revoked, given its lifetime.

Takeaways:

The admin.conf kubeconfig should not be shared, even with people who are meant to run or operate the Kubernetes cluster, because its access cannot be revoked. There are many helpful tools out there, such as OpenUnison, permission-manager, or even kubeadm, that can help create tailored kubeconfig files whose access can easily be revoked (by deleting their RBAC bindings, as long as they are not in system:masters). Also, if you are using a managed Kubernetes service from a cloud provider, the initial kubeconfig you are given is usually not admin.conf, so this particular risk does not apply. The company I work for has not yet decided how to deal with this issue, but one thing is certain: we have learned the hard lesson.

An interesting article related to the subject: https://blog.aquasec.com/kubernetes-authorization